Patrick Hall - Real-World Strategies for Model Debugging

Speaker Bio -

Patrick Hall is the Principal Scientist at bnh.ai.

Talk Abstract -

You used cross-validation, early stopping, grid search, monotonicity constraints, and regularization to train a generalizable, interpretable, and stable model. Its fit statistics look just fine on out-of-time test data, and better than the linear model it's replacing. You selected your probability cutoff based on business goals and you even containerized your model to create a real-time scoring engine for your pals in information technology (IT). Time to deploy?
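Selecting a probability cutoff "based on business goals" can be made concrete. The sketch below assumes hypothetical per-decision payoffs (`tp_value`, `fp_cost`, `fn_cost`), tries each observed score as a cutoff (plus a "flag nothing" option), and keeps the one with the highest total value. The function names and numbers are illustrative, not taken from the talk.

```python
# Sketch: pick the probability cutoff that maximizes an assumed business value.
# The payoff figures (tp_value, fp_cost, fn_cost) are hypothetical inputs.

def business_value(y_true, probs, cutoff, tp_value, fp_cost, fn_cost):
    """Total value of labeling every row with prob >= cutoff as positive."""
    total = 0.0
    for y, p in zip(y_true, probs):
        predicted_positive = p >= cutoff
        if predicted_positive and y == 1:
            total += tp_value      # caught a true positive
        elif predicted_positive and y == 0:
            total -= fp_cost       # acted on a false alarm
        elif not predicted_positive and y == 1:
            total -= fn_cost       # missed a true positive
    return total


def choose_cutoff(y_true, probs, tp_value, fp_cost, fn_cost):
    """Try each observed score as a cutoff (inf = flag nothing) and keep the best."""
    candidates = sorted(set(probs)) + [float("inf")]
    return max(
        candidates,
        key=lambda c: business_value(y_true, probs, c, tp_value, fp_cost, fn_cost),
    )


if __name__ == "__main__":
    y = [1, 1, 0, 0, 1, 0]
    p = [0.9, 0.7, 0.6, 0.4, 0.35, 0.1]
    best = choose_cutoff(y, p, tp_value=100, fp_cost=80, fn_cost=10)
    print(best, business_value(y, p, best, 100, 80, 10))
```

With these made-up payoffs, false alarms are expensive, so the chosen cutoff (0.7) is well above 0.5. A cutoff tuned this way is still only as trustworthy as the scores feeding it.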

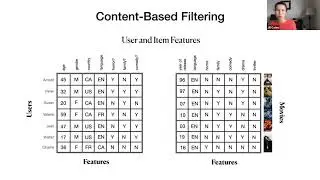

Not so fast. Unfortunately, current best practices for machine learning (ML) model training and assessment can be insufficient for high-stakes, real-world ML systems. Much like other complex IT systems, ML models must be debugged for logical or run-time errors and security vulnerabilities. Recent high-profile failures have made it clear that ML models must also be debugged for disparate impact and other types of discrimination.
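One common way to screen a model's decisions for disparate impact is the adverse impact ratio (AIR): the favorable-outcome rate of a protected group divided by that of a reference group. The minimal sketch below assumes binary decisions (1 = favorable) and hypothetical group labels; the 0.8 threshold echoes the "four-fifths" rule of thumb from US employment-selection guidelines and is used here only for illustration.

```python
# Sketch: adverse impact ratio (AIR), one common disparate-impact check.
# Group labels and the 0.8 threshold ("four-fifths rule") are illustrative.

def favorable_rate(decisions, groups, group):
    """Share of favorable (1) decisions received by members of `group`."""
    outcomes = [d for d, g in zip(decisions, groups) if g == group]
    if not outcomes:
        raise ValueError(f"no rows for group {group!r}")
    return sum(outcomes) / len(outcomes)


def adverse_impact_ratio(decisions, groups, protected, reference):
    """Favorable rate of the protected group divided by that of the reference group."""
    ref_rate = favorable_rate(decisions, groups, reference)
    if ref_rate == 0:
        raise ValueError("reference group received no favorable decisions")
    return favorable_rate(decisions, groups, protected) / ref_rate


if __name__ == "__main__":
    decisions = [1, 0, 0, 1, 1, 1, 1, 0]          # 1 = e.g. credit approved
    groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
    air = adverse_impact_ratio(decisions, groups, protected="a", reference="b")
    print(f"AIR = {air:.3f}, flagged = {air < 0.8}")
```

A low AIR is a signal to investigate, not proof of discrimination on its own; in practice it would be paired with significance tests and a review of which features drive the gap.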

This presentation introduces model debugging, an emergent discipline focused on finding and fixing errors in the internal mechanisms and outputs of ML models. Model debugging attempts to test ML models like code (because they are usually code). It enhances trust in ML directly by increasing accuracy on new or holdout data, by decreasing or identifying hackable attack surfaces, or by decreasing discrimination. As a side effect, model debugging should also increase the understanding and interpretability of model mechanisms and predictions.
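Testing a model "like code" can be as simple as writing assertions about how its predictions should behave. The sketch below is one such test: a sensitivity sweep that changes a single feature and reports any step where the score moves in the wrong direction, which is a quick way to confirm that monotonicity constraints actually hold. `toy_model` and its `income` and `debt_ratio` features are hypothetical stand-ins for a real trained model, and the test function is written in the style of a pytest test.

```python
# Sketch: a unit-test-style sensitivity check on a model's predictions.
# `toy_model` and its features are hypothetical stand-ins for a real scorer.
import math


def toy_model(row):
    """Hypothetical logistic scorer: higher income raises, higher debt lowers the score."""
    z = -2.0 + 0.03 * row["income"] - 0.5 * row["debt_ratio"]
    return 1.0 / (1.0 + math.exp(-z))


def monotonicity_violations(predict, row, feature, values, increasing=True):
    """Sweep `feature` over `values`; return adjacent pairs that break the expected direction."""
    violations = []
    prev_value, prev_score = None, None
    for v in sorted(values):
        score = predict({**row, feature: v})   # copy the row, change one feature
        if prev_score is not None:
            broke = score < prev_score if increasing else score > prev_score
            if broke:
                violations.append((prev_value, v))
        prev_value, prev_score = v, score
    return violations


def test_income_is_monotone():
    row = {"income": 50.0, "debt_ratio": 1.0}
    assert monotonicity_violations(toy_model, row, "income", range(0, 101, 10)) == []


if __name__ == "__main__":
    test_income_is_monotone()
    print("income sweep passed")
```

Because the check only calls `predict`, the same test can wrap any model that scores a dictionary of features, and it can run in continuous integration alongside ordinary software tests.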

Want a sneak peek of some model debugging strategies? Check out these open resources: https://towardsdatascience.com/strate....

Video: Patrick Hall - Real-World Strategies for Model Debugging, uploaded to the Toronto Machine Learning Series (TMLS) channel on 16 August 2023.