Acoustic VR of Human Tongue: Real-time Speech-driven Visual Tongue System

Acoustic VR of Human Tongue: Real-time Speech-driven Visual Tongue System

Ran Luo*, Qiang Fang* (equal contributors), Jianguo Wei, Weiwei Xu, Yin Yang

IEEE VR, 2017

====================

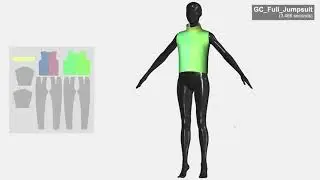

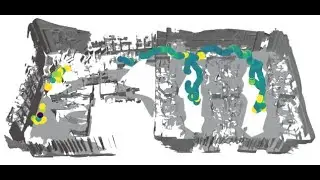

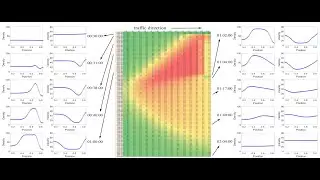

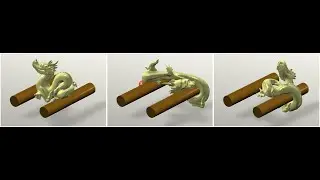

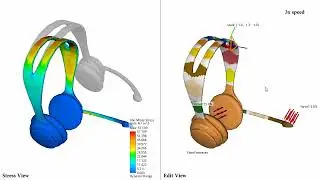

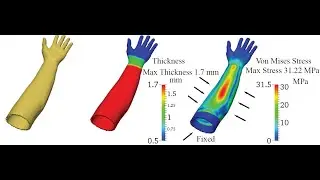

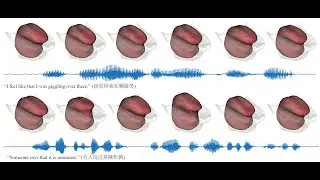

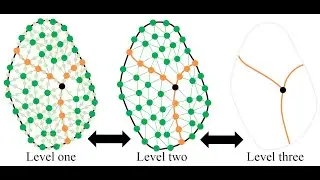

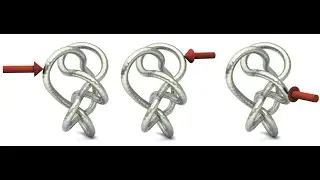

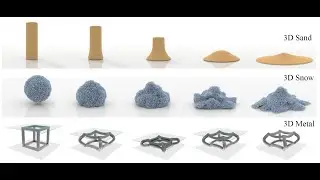

We propose an acoustic-VR system that converts acoustic signals of human language (Chinese) to realistic 3D tongue animation sequences in real time. It is known that directly capturing the 3D geometry of the tongue at a frame rate that matches the tongue's swift movement during the language production is challenging. This difficulty is handled by utilizing the electromagnetic articulography (EMA) sensor as the intermediate medium linking the acoustic data to the simulated virtual reality. We leverage Deep Neural Networks to train a model that maps the input acoustic signals to the positional information of pre-defined EMA sensors based on 1,108 utterances. Afterwards, we develop a novel reduced physics-based dynamics model for simulating the tongue's motion. Unlike the existing methods, our deformable model is nonlinear, volume-preserving, and accommodates collision between the tongue and the oral cavity (mostly with the jaw). The tongue's deformation could be highly localized which imposes extra difficulties for existing spectral model reduction methods. Alternatively, we adopt a spatial reduction method that allows an expressive subspace representation of the tongue's deformation. We systematically evaluate the simulated tongue shapes with real-world shapes acquired by MRI/CT. Our experiment demonstrates that the proposed system is able to deliver a realistic visual tongue animation corresponding to a user's speech signal.

Смотрите видео Acoustic VR of Human Tongue: Real-time Speech-driven Visual Tongue System онлайн, длительностью часов минут секунд в хорошем качестве, которое загружено на канал Yin Yang 14 Апрель 2024. Делитесь ссылкой на видео в социальных сетях, чтобы ваши подписчики и друзья так же посмотрели это видео. Данный видеоклип посмотрели 17 раз и оно понравилось 0 посетителям.