Pre-Trained Multilingual Sequence-to-Sequence Models for NMT: Tips, Tricks and Challenges

Speaker:

Annie En-Shiun Lee, Assistant Professor (Teaching Stream), Department of Computer Science, University of Toronto

Abstract:

Neural Machine Translation (NMT) has grown tremendously in less than ten years and has already entered a mature phase. Pre-trained multilingual sequence-to-sequence (PMSS) models, such as mBART and mT5, are pre-trained on large amounts of general-domain data and then fine-tuned, delivering impressive results on natural language inference, question answering, text simplification and neural machine translation.

This tutorial presents:

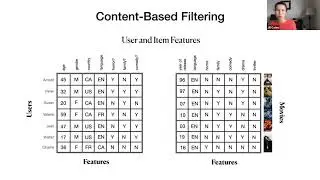

1) An introduction to pre-trained sequence-to-sequence models

2) How to adapt pre-trained models for NMT

3) Tips and tricks for NMT training and evaluation

4) Challenges and problems faced when using these models

This tutorial will be useful for anyone interested in NMT, from both a research and an industry perspective.

The recorded talk was uploaded to the Toronto Machine Learning Series (TMLS) channel on 16 August 2023.
