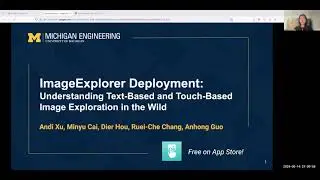

ImageExplorer Deployment: Understanding Text-Based and Touch-Based Image Exploration in the Wild


W4A 2024, Talk

Authors:

Andi Xu, Minyu Cai, Dier Hou, Ruei-Che Chang, Anhong Guo

Abstract:

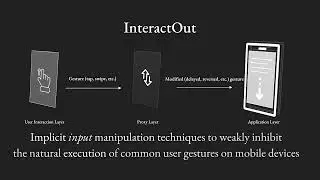

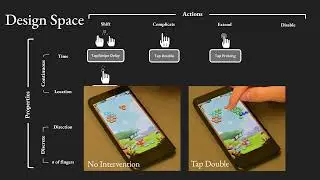

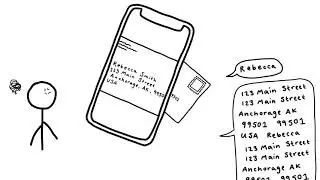

Blind and visually impaired (BVI) users often rely on alt-texts to understand images. AI-generated alt-texts are scalable and efficient, but they may lack detail and are prone to errors. Multi-layered touch interfaces, on the other hand, can provide rich detail and spatial information, but they may take longer to explore and impose a higher mental load. To understand how BVI users leverage these two methods, we deployed ImageExplorer, an iOS app on the Apple App Store that provides multi-layered image information through both text-based and touch-based interfaces with customizable levels of granularity. Over 12 months, 371 users uploaded 651 images and performed 694 explorations. We logged their activities to understand how BVI users consume image captions in the wild. This work contributes to a holistic understanding of BVI users' image exploration behavior and the factors that influence it. We provide design implications for future image captioning models and visual access tools.

Paper available at: https://guoanhong.com/papers/W4A2024-...

The ImageExplorer app is publicly available at: https://imageexplorer.org/

Video uploaded to the Anhong Guo channel on 26 May 2024.

![[YTP] BrayaB Wyatt ends a RawR with MaM Hardy {WWE}](https://images.reviewsvideo.ru/videos/l60P8SIe5yc)

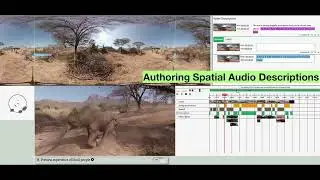

![[ASSETS 2024] Audio Description Customization](https://images.reviewsvideo.ru/videos/Ijb3QeOdkhI)

![[UIST 2024] ProgramAlly: Custom Visual Access Programs via Multi-Modal End-User Programming (Video)](https://images.reviewsvideo.ru/videos/NPECbf0l9-4)

![[UIST 2024] ProgramAlly: Custom Visual Access Programs via Multi-Modal End-User Programming (30s)](https://images.reviewsvideo.ru/videos/3lgh7MiaejM)

![[UIST 2024] VRCopilot: Authoring 3D Layouts with Generative AI Models in VR (30s Preview)](https://images.reviewsvideo.ru/videos/mryaZzTSmXw)

![[UIST 2024] VRCopilot: Authoring 3D Layouts with Generative AI Models in VR (Video)](https://images.reviewsvideo.ru/videos/95xd8oF3Rik)

![[UIST 2024] WorldScribe: Towards Context-Aware Live Visual Descriptions (30s Preview)](https://images.reviewsvideo.ru/videos/zpN85oFrSOE)